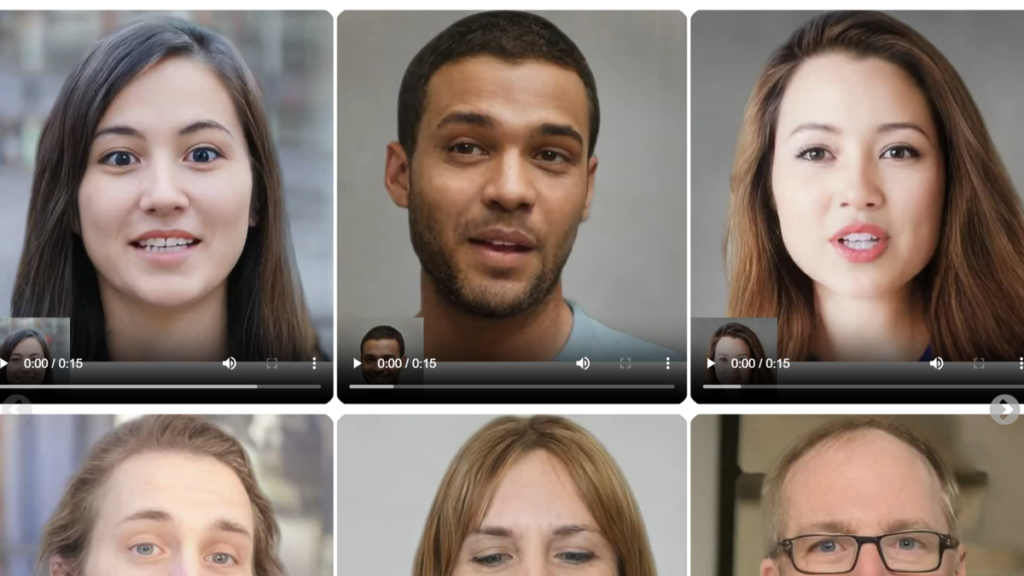

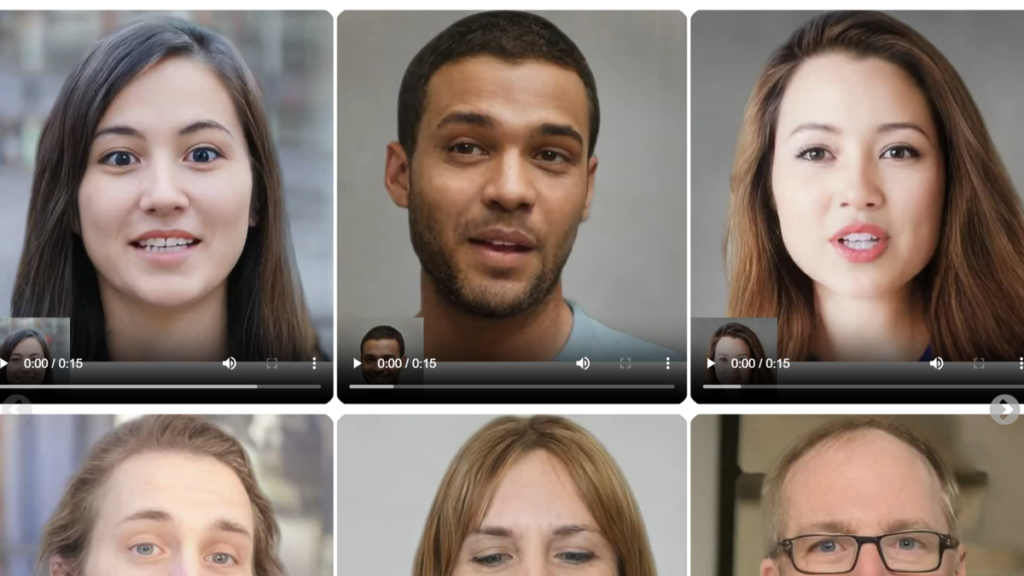

Microsoft has built an AI system that can make pictures talk and sing. The tool, developed by a group of researchers at Microsoft Research Asia, takes a still photo of a person together with an audio file and animates the photo to match the audio. According to the researchers, it goes beyond simple animation: the output includes appropriate facial expressions and faithfully depicts the person in the photo as they sing or speak along to the audio track.

Given a single static image and a speech audio clip, the new framework, VASA, can create lifelike talking faces of virtual characters with appealing visual affective skills (VAS).

The researchers say the main innovations are a model that generates holistic facial dynamics and head movements within a face latent space, and the development of that expressive, disentangled face latent space from videos. Based on extensive experiments and evaluations, including a set of new metrics, the team claims their approach significantly outperforms earlier methods across several dimensions.
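To illustrate what a "disentangled" latent space buys, here is a minimal sketch, assuming the face code splits into a fixed appearance part taken from the still image plus per-frame facial dynamics and head pose parts. The class and function names, the vector sizes, and the random stand-in for the motion generator are all hypothetical; this is not VASA-1's actual code or latent layout.

```python
from dataclasses import dataclass, replace

import numpy as np


@dataclass(frozen=True)
class FaceLatent:
    """Hypothetical disentangled face code (not VASA-1's actual layout)."""
    appearance: np.ndarray  # identity/appearance, taken once from the still image
    dynamics: np.ndarray    # holistic facial dynamics: lips, eyes, expression
    head_pose: np.ndarray   # head rotation and translation


def animate(
    source: FaceLatent, motion_seq: list[tuple[np.ndarray, np.ndarray]]
) -> list[FaceLatent]:
    """Keep the source appearance fixed and swap in per-frame motion codes."""
    return [replace(source, dynamics=d, head_pose=p) for d, p in motion_seq]


rng = np.random.default_rng(0)
source = FaceLatent(
    appearance=rng.normal(size=64),
    dynamics=np.zeros(32),
    head_pose=np.zeros(6),
)
# Stand-in for a motion generator's output: 10 frames of (dynamics, pose) codes.
motion = [(rng.normal(size=32), rng.normal(size=6)) for _ in range(10)]
frames = animate(source, motion)
# Each FaceLatent in `frames` would then be passed to a decoder to render an image.
```

Because identity lives in its own component, the generator only has to produce motion codes; the person's appearance is reused unchanged in every frame.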

What is VASA-1?

According to the Microsoft researchers, in addition to synchronising lip movements with the audio, their technique can generate a wide range of subtle facial expressions and lifelike head movements.

With VASA-1, the researchers set out to make still images talk, sing, and express emotions in sync with any audio file. The result is an AI system that turns still images, such as photos, sketches, or paintings, into believable animations synchronised with the audio. In terms of control, the researchers say their diffusion model can take optional signals such as the main eye gaze direction, head distance, and emotion offsets.
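One way a generative model can accept *optional* control signals is to pair each signal with a presence flag and zero-fill it when absent, so the same network runs with or without it. The sketch below assumes that scheme; the signal names, their dimensions, and `build_condition` are hypothetical illustrations, not VASA-1's actual interface.

```python
from typing import Optional

import numpy as np

# Hypothetical dimensions for each optional control signal.
SIGNAL_DIMS = {"gaze": 2, "head_distance": 1, "emotion": 8}


def build_condition(audio_feat: np.ndarray, **signals: Optional[np.ndarray]) -> np.ndarray:
    """Concatenate audio features with optional control signals.

    Each control is followed by a 0/1 presence flag; a missing control is
    zero-filled, so one model can be run with or without it (assumed scheme).
    """
    unknown = set(signals) - set(SIGNAL_DIMS)
    if unknown:
        raise ValueError(f"unknown signals: {sorted(unknown)}")
    parts = [np.asarray(audio_feat, dtype=float)]
    for name, dim in SIGNAL_DIMS.items():
        value = signals.get(name)
        if value is None:
            parts += [np.zeros(dim), np.zeros(1)]  # absent: null value, flag 0
        else:
            value = np.asarray(value, dtype=float)
            if value.shape != (dim,):
                raise ValueError(f"{name} must have shape ({dim},)")
            parts += [value, np.ones(1)]  # present: given value, flag 1
    return np.concatenate(parts)


audio = np.ones(16)  # stand-in for one frame of audio features
unconditioned = build_condition(audio)
with_gaze = build_condition(audio, gaze=np.array([0.1, -0.2]))
```

Both calls produce a vector of the same length, which is what lets a single model serve both cases. In diffusion models, conditions are often dropped at random during training so the model learns to work with and without them; the article does not say whether VASA-1 uses this exact approach.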

How was VASA-1 created?

The researchers acknowledge that, despite its wide range of possible applications, VASA-1 could be misused. As a precaution, they have reportedly chosen not to make VASA-1 publicly available, recognising that such cutting-edge technology needs responsible stewardship to prevent misuse or unintended consequences.

The researchers also note that although the animations look realistic and the sound and visuals are seamlessly integrated, closer inspection may reveal subtle imperfections and telltale signs that the material is AI-generated.

According to the research paper, VASA-1's breakthrough came from an extensive training process in which the system was exposed to a large amount of face video showing a wide variety of facial expressions. This large dataset reportedly allowed the algorithm to learn and replicate human speech patterns and the subtleties of human emotion.

The current version of VASA-1 generates smooth 512 x 512 pixel video frames at 45 frames per second. Producing these lifelike animations reportedly requires the processing power of a desktop-class Nvidia RTX 4090 GPU and takes about two minutes on average.
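To put the reported figures in perspective, here is a quick back-of-the-envelope calculation: at 45 frames per second, each 512 x 512 frame has to be produced in roughly 22 ms. The 10-second clip length is an arbitrary example, not a figure from the research.

```python
WIDTH, HEIGHT = 512, 512  # reported frame size in pixels
FPS = 45                  # reported frame rate


def per_frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame while keeping up with `fps`."""
    return 1000.0 / fps


def frames_for_clip(seconds: float, fps: float) -> int:
    """Number of frames needed for a clip of the given length."""
    return round(seconds * fps)


pixels_per_frame = WIDTH * HEIGHT           # 262,144 pixels
budget = per_frame_budget_ms(FPS)           # about 22.2 ms per frame
ten_second_clip = frames_for_clip(10, FPS)  # 450 frames for an example 10 s clip
```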